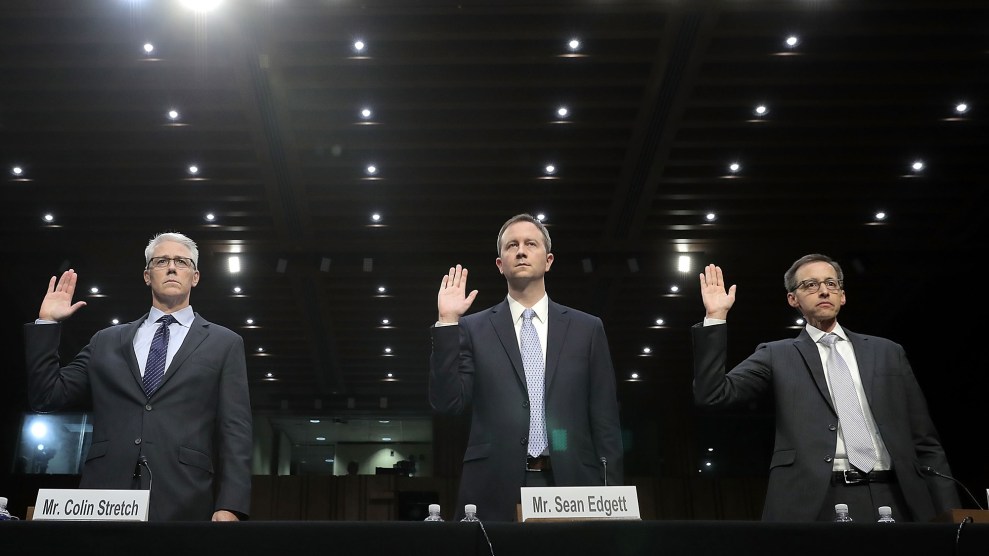

Employees from Facebook, Google, and Twitter are sworn in at a 2017 Senate hearing on Russian disinformation. Chip Somodevilla/Getty

After the 2016 election, Mark Zuckerberg notoriously dismissed the idea that Facebook had any role in the outcome as “crazy.”

Experts were skeptical of his denial, and Zuckerberg officially ate his words the next year when the Washington Post broke news that Facebook’s own internal investigation had revealed that Russians had created hundreds of accounts in an attempt to influence American politics. Their mission, special counsel Robert Mueller later determined in an indictment charging 13 Russians affiliated with the country’s Internet Research Agency for their actions during the election, was “to sow discord in the U.S. political system.”

Since the 2016 presidential elections, foreign governments, politicians, and trolls from Russia and beyond have undertaken their own disinformation campaigns targeting elections around the world. Their tactics and strategies offer clues to who might be involved in spreading misinformation in 2020 and the messages they will share.

Camille Francois, chief innovation officer at the social media security firm Graphika, said that while misinformation efforts abroad often portend what will happen here, companies and lawmakers in the United States haven’t learned to pay enough attention to what’s happening in foreign countries.

“It’s frustrating that the US doesn’t look internationally, because if you think of 2016, the IRA had been at work on Russians domestically, in Ukraine. They had been doing what they did to the U.S. in 2016 for many years,” she said.

Francois pointed to how misinformation and disinformation have been spread in recent elections in the United Kingdom, the Philippines, and Taiwan as potentially instructive on similar attempts to influence the 2020 American elections.

Events in those countries help show how the landscape of misleading online political information is shifting. It’s not just Russia or major actors akin to its IRA. Disinformation and manipulation are coming from domestic outfits, as seen in Rodrigo Duterte’s Philippines, from a greater number of countries including China and Iran, and from a range of private entities like the Archimedes Group or Psy-Group, both run out of Israel.

Private Groups

The extent of private attempts to influence US politics is unclear, but there have been several examples since 2016. Experts believe more are inevitable.

“We’ve done a number of takedowns of private companies that are increasingly trying to offer services in this space,” Nathaniel Gleicher, Facebook’s chief security officer, told Mother Jones. “We’ve also had takedowns of companies based in the Philippines, so we’re certainly seeing nongovernment actors engage here.”

In May, Facebook revealed that it had banned a network of over 200 accounts linked to the Archimedes Group targeting half a dozen African countries along with users in Latin America and Southeast Asia.

After Alabama’s 2017 special Senate election, the Washington Post found that the social media firm New Knowledge ran a disinformation campaign during the election in support of victorious Democratic candidate Doug Jones, in what it described as an “experiment” funded by the tech billionaire Reid Hoffman.

By 2018, special counsel Robert Mueller had begun probing Psy-Group, a private “intelligence firm” staffed by former Israeli spies that carried out online social media “influence campaigns,” according to one internal document obtained by the Wall Street Journal. According to reports in the New Yorker and the New York Times, the group may have attempted to influence an American hospital board election and pitched the Trump campaign on its influence services.

Even harder to spot than private firms running influence operations are independent political trolls who act on their own and aren’t explicitly organized.

“One of my concerns going forward is quick, small-scale operations. We’re in the paradox where in 2016 you had Russian actors trying to pose as American trolls. Now you’re in the situation of American trolls are posing as Russian actors,” social media researcher Ben Nimmo, a fellow at the Atlantic Council’s Digital Forensics Lab, said.

Lone actors can be difficult or impossible to track. It’s unclear, for example, who first created the doctored video that was used to justify revoking CNN reporter Jim Acosta’s White House press credentials. The video gained traction after being tweeted by a prominent Infowars reporter and White House Press Secretary Sarah Huckabee Sanders, but its provenance was never firmly determined. While there was more of a digital paper trail for hoaxes surrounding the migrant caravan that captured the Trump administration’s interest in the fall, there again was no single point of origin. Small-time actors are usually caught only when they are tremendously incompetent and indiscreet, as in the case of Jacob Wohl, a right-wing troll who was banned from Twitter hours after he was quoted in a USA Today story bragging about his methods of seeding misinformation on the platform. Nimmo warns that such one-off, rogue instances of disinformation, committed not by countries but by trolls, will not only continue to expand in 2020 but are also especially difficult to stop.

There is a potential upside to a cacophony of new groups trying to bend political discourse to their own ends: all the noise might make it harder for any state actor to meaningfully dominate the conversation and affect the outcome of the campaign.

“Going into 2020 there’s so much disinformation from all these Jacob Wohl–type characters, that it would be harder for Russia to impact the election,” notes Clint Watts, a research fellow at the Foreign Policy Research Institute.

New Countries

Aside from private individuals and groups, countries could attempt to influence the election—and not just Russia.

Since as early as 2018, Twitter and Facebook have disclosed finding Iranian political disinformation efforts on their platforms targeted at Americans, reflecting a battle between opposing factions to sway foreign opinion about the country. The Islamic Republic of Iran has spread messages backing Iran’s religious authority and posts critical of Saudi Arabia and other national rivals. A fringe opposition party, the People’s Mujahedin of Iran, has used its own troll farm to coordinate social media campaigns promoting regime change in Iran, and might still be doing so. These networks could become active if any 2020 candidates weigh in strongly on Iran or its top foreign policy priorities.

China is another country that the White House and members of Congress are particularly concerned about. An administration official told the Washington Post in September that they were watching closely for potential Chinese election influence efforts. No Chinese efforts to influence American politics online have come to public light, but, more so than most nations, China has the capabilities to pull off interference on the scale of Russia’s 2016 intervention. China has already flexed its interference abilities in recent elections in Cambodia and Taiwan.

In a December 2018 joint letter, senators Catherine Cortez Masto (D-Nev.) and Marco Rubio (R-Fla.) wrote to Secretary of State Mike Pompeo and other top administration officials about concerning allegations by local authorities and independent observers “that the CCP used illegal funds and disinformation to influence the election results in favor of the CCP’s strategic interest” in Taiwan. Experts also think that China tried to interfere in Cambodia’s 2018 elections via hacking.

Companies Say They’re Better Prepared

Security and integrity teams at social media companies say they’ve been learning lessons from these political influence operations and applying them to elections worldwide.

“I think every country we’ve worked in is different. But I think each country offers some new insights for future elections,” Facebook’s Gleicher said, citing how the company’s approach to the UK’s European Parliament elections in May was partially informed by lessons learned in Indian parliamentary elections this past spring.

Gleicher said that since 2017 the company has worked to create a “virtuous cycle” of identifying new influence operations and creating large-scale solutions to stem them.

“As investigators identify a new threat, we work with the product teams to build out scaled solutions to make that threat more difficult to scale,” he said. “Then, going forward, more automated systems catch large chunks of that threat, and our investigators can turn to new challenges.”

Unlike in 2016, Facebook and other social media companies now have dedicated teams working to find attempts to influence elections, and are in contact with one another about new efforts to abuse their platforms.

A Twitter spokesperson explained that the company had joined other social media platforms and “established a dedicated, formal communications channel to facilitate real-time information sharing regarding election integrity.” Since 2017, Twitter has regularly booted batches of accounts involved in influence campaigns, and, unlike other companies, released public archives of their posts and made searchable versions available to researchers.

While Google has similarly publicly removed coordinated influence accounts from its platform, it declined to comment for this story.

Hacking

Regardless of what social media companies do to stop the spread of misinformation across their platforms, some experts think the biggest digital threats lie elsewhere.

“Hacking is the most effective thing the Russians did in 2016,” Nimmo said. “That was absolutely huge, far more than trolling.”

During the 2016 elections, Russia accessed state voting systems, hacked the Democratic National Committee, and obtained the emails of party operatives, including Clinton’s campaign chair, John Podesta.

“What it really comes to is, Will someone take the aggressive next step of hacking?” asked Watts. Russian hacking attempts targeting the 2016 election started at least the summer before the election, at a point, Watts noted, when Hillary Clinton was heavily favored to win the Democrats’ nomination. This time, targeting candidates so early might make less sense. “They can’t really hack two dozen campaigns,” Watts said.

The public is now more aware of influence efforts on social media, likely making the efforts less effective. But public awareness can’t protect election systems or stop the release of damaging information obtained through hacking efforts.

And hacking, which can probably do the most damage, remains an area of acute vulnerability. While tech companies have taken some steps to curb influence campaigns on their platforms, Republican Senate Majority Leader Mitch McConnell has declined to hold votes on election security legislation in advance of 2020, and experts think that Democratic campaigns probably aren’t doing enough to secure themselves.