I’m a big proponent of high-quality, universal pre-K. At the same time, I understand that the evidence in favor of it isn’t rock solid. Overall, I think the case for pre-K is fairly strong, but it suffers from the fact that it’s really hard to conduct solid research on long-term outcomes. In particular, there’s always the problem of scale: even if you get great results from a pilot program, there’s no guarantee that you can scale it nationwide and still maintain the same quality. This is a particular problem with Head Start, the longest-running and best-known pre-K program in the country. It has been scaled, but multiple studies have suggested that its results have been disappointing.

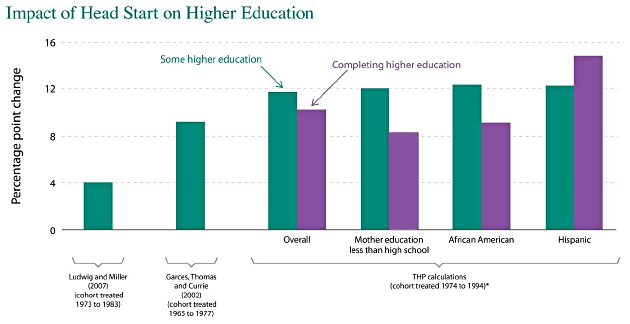

But time marches on, and this allows us to conduct new research as Head Start kids grow up. The longer the follow-up period, the better our chance of measuring lasting differences in children who attended Head Start. On that score, we have some good news and some bad news from the Hamilton Project.

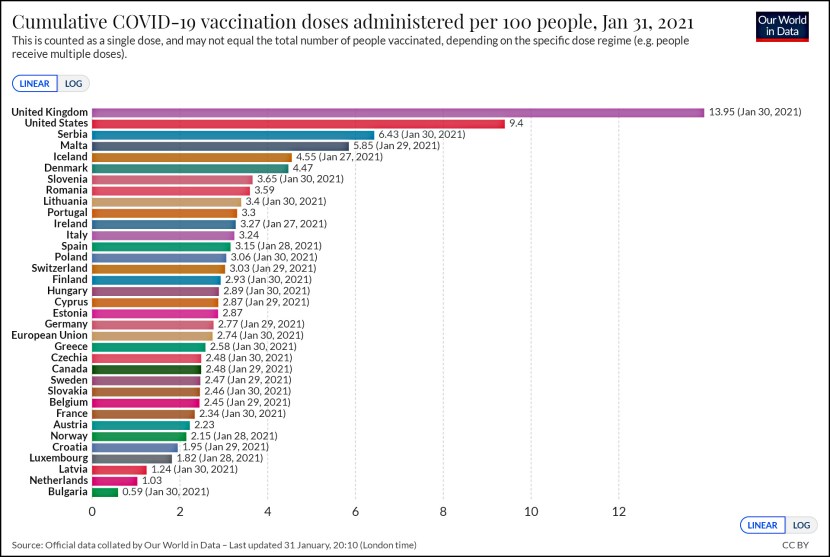

First the good news. The study compared children from the same families where one attended Head Start and the other didn’t. Their birth cohort started in 1974, and they used the 2010 edition of the National Longitudinal Survey of Youth, so their oldest subjects were in their thirties. What they found was more positive than previous surveys. For example, here’s the result on higher education (which includes licenses and certificates):

The Head Start kids started and completed higher education at substantially higher rates than kids who didn’t attend. The study shows similar results for high school graduation.

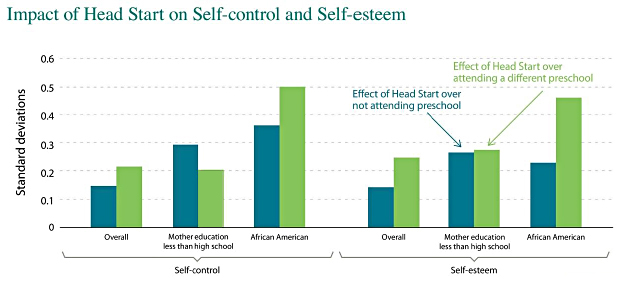

So that’s great. But one of the things we’ve learned about pre-K is that its biggest impact is often on non-cognitive traits. And sure enough, the Hamilton study showed strong effects on self-control and self-esteem:

So what’s the bad news? More accurately, it’s cautionary news, but take a look at those green bars. They show Head Start having a bigger effect relative to other preschools than relative to no preschool at all. That can only happen if the other preschools were, on average, worse than doing nothing. In some cases the gap is pretty large, which in turn means these other preschools would have been a lot worse than doing nothing at all.
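Here’s the arithmetic, roughly. Call the average outcome for Head Start kids $H$, for kids in other preschools $P$, and for kids with no preschool $N$. These symbols are just shorthand for illustration, not notation from the study, and treating each comparison as a simple difference in averages glosses over the study’s sibling design:

$$
\underbrace{H - P}_{\text{vs. other preschools}} \;>\; \underbrace{H - N}_{\text{vs. no preschool}}
\quad\Longleftrightarrow\quad -P > -N
\quad\Longleftrightarrow\quad P < N
$$

In other words, a bigger Head Start advantage over other preschools than over no preschool implies that the other preschools scored below no preschool at all.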

This is possible, of course. But it doesn’t seem all that likely, which raises the question of whether the data analysis here has some flaws. For the time being, then, I consider this tentatively positive news about Head Start. But I’ll wait for other experts to review the study before I celebrate too much.